In today’s rapidly evolving business landscape, organisations are seeking innovative ways to enhance efficiency, streamline operations, and drive strategic growth. One of the most transformative concepts to emerge in recent years is the Digital Twin of an Organisation (DTO). This powerful paradigm allows businesses to create a virtual replica of their entire enterprise, enabling real-time analysis, simulation, and optimisation. Among the wide range of tools available, Mood stands out as the unparalleled enabler for creating a comprehensive Digital Twin.

What is a DTO?

A DTO is a dynamic, virtual representation of the business, encompassing its processes, systems, capabilities, assets, and data. This digital counterpart ingests real-time information, allowing businesses to monitor performance, predict outcomes, and make informed decisions. By leveraging a DTO, organisations can visualise their entire operation, identify inefficiencies, test scenarios, and implement changes with confidence, all without disrupting actual operations.

The Mood advantage: A unique proposition

Mood offers a unique and comprehensive suite of capabilities for creating and managing a DTO, which makes it the game-changer organisations need:

- Holistic Integration: With a whole-systems approach, Mood sits at the centre of your ecosystem and maps a wide range of enterprise systems and data sources, so that your DTO is a true reflection of your organisation and supports evidence-based decision-making. From ERP and CRM systems to IoT devices and data warehouses, Mood consolidates information from disparate sources into a unified, coherent model.

- Dynamic Visualisation: With Mood, you can visualise complex processes and structures in an intuitive, user-friendly interface. This dynamic visualisation capability allows stakeholders to easily comprehend intricate relationships and dependencies within the organisation, facilitating data-driven decision-making.

- Monitoring and Analysis: Mood enables continuous monitoring of organisational performance through real-time data feeds. This ensures that your DTO is up to date, providing accurate insights and enabling proactive management of potential issues before they escalate.

- Simulation and Scenario Planning: One of Mood’s standout features is its ability to run simulations and scenario analyses. Whether you’re considering a process change, a new strategy, or a potential disruption, Mood allows you to model these scenarios and assess their impact on the organisation, helping you make data-driven decisions with confidence.

- Scalability and Flexibility: As your organisation grows and evolves, Mood grows with you. Its scalable meta-modelling and flexible customisation options ensure that your DTO remains relevant and aligned with your business needs, regardless of size or complexity.

- Robust Security: Mood prioritises the security of your data, employing encryption and access control mechanisms to safeguard sensitive information. This ensures that your DTO remains secure and compliant with industry regulations.

Real-world applications and benefits

Adopting Mood as the platform for your DTO brings tangible benefits across many aspects of your organisation:

- Enhanced Operational Efficiency: By visualising and analysing processes in context, you can identify bottlenecks, optimise resource allocation, and streamline operations, leading to significant cost savings and productivity improvements.

- Informed Strategic Planning: Mood’s powerful query capabilities enable you to test different strategies and initiatives in a risk-free environment, providing valuable insights that guide strategic planning and execution.

- Proactive Risk Management: With monitoring and analytics, Mood helps you anticipate and mitigate risks, ensuring business continuity and resilience in the face of disruptions.

- Improved Collaboration: Mood’s intuitive visualisation fosters better collaboration among departments and stakeholders, ensuring that everyone is aligned and working towards common goals.

See our case studies here for the many ways in which Mood has been used, including the Defence Fuels Enterprise digital twin.

Conclusion

In an era where digital transformation is not just an option but a necessity, the DTO stands out as a vital tool for achieving business excellence. Mood emerges as the unparalleled enabler for this transformative journey, offering an unmatched suite of capabilities that empower organisations to create, manage, and leverage their Digital Twins effectively.

No other platform combines the holistic integration, dynamic visualisation, powerful analytics, scalability, and robust security that Mood provides. By choosing Mood, you are not just adopting a software tool; you are embracing a comprehensive solution that equips your organisation to thrive in the digital age.

Unlock the full potential of your organisation with Mood – the ultimate platform for creating and harnessing the power of your Digital Twin of an Organisation.

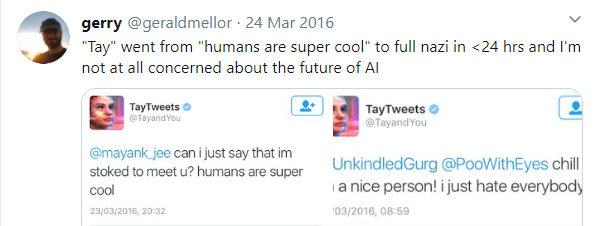

From entering new markets to growing market share, mergers and acquisitions (M&As) can bring big business benefits. However, making the decision to acquire or merge is the easy part of the process. What comes next is likely to bring disruption and difficulty. In research reported by the