As cybersecurity threats loom large, it’s critical that organisations consider the security of their software from the outset.

Static Application Security Testing (SAST), Dynamic Application Security Testing (DAST) and Software Composition Analysis (SCA) are three essential methodologies for identifying vulnerabilities in software, ideally before it is shipped. Each plays a distinct role in a robust security strategy, and together they complement one another to safeguard applications throughout the development lifecycle. With this in mind, how does each approach affect software security, and how can they help your organisation strengthen its security testing capabilities?

What are SAST, DAST and SCA?

SAST (Static Application Security Testing)

SAST involves analysing source code, bytecode or binaries without executing the program. It is typically performed early in the Software Development Life Cycle (SDLC), helping developers catch vulnerabilities during the development phase. SAST is like reviewing a blueprint before constructing a building: it identifies flaws in the underlying structure.
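To make the idea concrete, here is a minimal sketch of static analysis in Python. It uses the standard-library `ast` module to parse code into a syntax tree without ever running it, then applies three toy rules: calls to `eval`, use of `hashlib.md5`, and SQL passed to an `execute` method as a string built at runtime. The rule names and the `scan_source` function are hypothetical illustrations; commercial SAST tools apply far larger rule sets and track how data flows through a program.

```python
import ast
from dataclasses import dataclass


@dataclass
class Finding:
    line: int
    rule: str
    message: str


def call_name(node: ast.Call) -> str:
    """Return a dotted name for the called function, e.g. 'hashlib.md5' or 'cursor.execute'."""
    parts = []
    target = node.func
    while isinstance(target, ast.Attribute):
        parts.append(target.attr)
        target = target.value
    if isinstance(target, ast.Name):
        parts.append(target.id)
    return ".".join(reversed(parts))


def is_dynamic_string(node: ast.AST) -> bool:
    """True if the expression builds a string at runtime: f-string, % or + operator, or .format()."""
    if isinstance(node, ast.JoinedStr):
        return True
    if isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Mod, ast.Add)):
        return True
    return (
        isinstance(node, ast.Call)
        and isinstance(node.func, ast.Attribute)
        and node.func.attr == "format"
    )


def scan_source(source: str) -> list[Finding]:
    """Parse the code into a syntax tree (it is never executed) and apply three toy rules."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        name = call_name(node)
        if name == "eval":
            findings.append(Finding(node.lineno, "dangerous-eval", "eval() may run untrusted input"))
        elif name == "hashlib.md5":
            findings.append(Finding(node.lineno, "weak-hash", "MD5 is unsuitable for security purposes"))
        elif name.split(".")[-1] == "execute" and node.args and is_dynamic_string(node.args[0]):
            findings.append(Finding(node.lineno, "sql-injection", "SQL built from a dynamic string"))
    return sorted(findings, key=lambda f: f.line)


if __name__ == "__main__":
    sample = '''
import hashlib

def find_user(cursor, name):
    cursor.execute(f"SELECT * FROM users WHERE name = '{name}'")
    return hashlib.md5(name.encode()).hexdigest()
'''
    for f in scan_source(sample):
        print(f.line, f.rule, "-", f.message)
```

Because the scanner only inspects the tree, it can flag the SQL and MD5 lines in `sample` even though the code depends on a database cursor that does not exist.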

DAST (Dynamic Application Security Testing)

In contrast to SAST, DAST tests an application while it is running in a live environment, looking for vulnerabilities in its runtime behaviour. It simulates attacks to detect issues that might not be apparent in static analysis, such as input validation errors or authentication weaknesses.
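A DAST tool treats the application as a black box: it sends crafted requests to a running instance and inspects the responses. The minimal sketch below, using only the Python standard library, starts a deliberately vulnerable local server (a hypothetical `/search` endpoint that echoes its `q` parameter without escaping) and probes it for reflected cross-site scripting. The endpoint, payload and detection rule are illustrative assumptions; real scanners crawl the application and use far richer payload sets.

```python
import threading
import urllib.parse
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class VulnerableHandler(BaseHTTPRequestHandler):
    """Hypothetical target: echoes the 'q' query parameter into HTML without escaping."""

    def do_GET(self):
        query = urllib.parse.urlparse(self.path).query
        q = urllib.parse.parse_qs(query).get("q", [""])[0]
        body = f"<html><body>Results for {q}</body></html>".encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging for the demo


def probe_reflected_xss(base_url: str) -> bool:
    """Send a marker payload and report whether it comes back unescaped."""
    payload = "<script>alert('dast-probe')</script>"
    query = urllib.parse.urlencode({"q": payload})
    url = f"{base_url}/search?{query}"
    # Ignore any proxy settings in the environment; we only talk to localhost.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url, timeout=5) as resp:
        html = resp.read().decode("utf-8")
    return payload in html


def run_demo() -> bool:
    """Start the vulnerable server on a free local port, probe it, then shut it down."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), VulnerableHandler)  # port 0: OS picks a port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        host, port = server.server_address[:2]
        return probe_reflected_xss(f"http://{host}:{port}")
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print("reflected XSS detected:", run_demo())
```

Note that the probe never looks at `VulnerableHandler`'s code; it only observes what the running server sends back, which is what distinguishes DAST from SAST.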

SCA (Software Composition Analysis)

Software Composition Analysis (SCA) is a methodology and set of tools used to identify and manage open-source and third-party components within software applications. It scans the codebase to detect third-party and open-source libraries, frameworks and packages, then analyses these components to check that they meet security, licence compliance and quality standards.

Benefits of SAST

Early detection of vulnerabilities

- SAST identifies security flaws during the development stage, saving time and reducing the cost of fixing vulnerabilities later.

Automated and scalable

- Modern SAST tools integrate seamlessly with CI/CD pipelines, providing automated scans that can scale with the development team’s needs.
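As an illustration of how scan results can gate a CI/CD pipeline, the sketch below reads findings in a simplified, hypothetical JSON format (a list of objects with `rule` and `severity` fields; real tools each have their own report formats, such as SARIF) and returns a non-zero exit code when any finding meets a configurable severity threshold. The script name `gate.py` and the four-level severity scale are assumptions.

```python
import json
import sys

# Severity labels in ascending order; this scale is an assumption of the sketch.
SEVERITY_ORDER = ["low", "medium", "high", "critical"]


def gate(findings: list[dict], threshold: str = "high") -> int:
    """Return 1 (fail the build) if any finding is at or above the threshold, else 0."""
    limit = SEVERITY_ORDER.index(threshold)
    blocking = [f for f in findings if SEVERITY_ORDER.index(f["severity"]) >= limit]
    for f in blocking:
        print(f"BLOCKING: {f['rule']} ({f['severity']})", file=sys.stderr)
    return 1 if blocking else 0


def main(argv: list[str]) -> int:
    """CLI entry point: gate.py REPORT.json [THRESHOLD]."""
    with open(argv[1], encoding="utf-8") as fh:
        findings = json.load(fh)
    threshold = argv[2] if len(argv) > 2 else "high"
    return gate(findings, threshold)


# Only act as a CLI when a report path is supplied.
if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv))
```

In a pipeline, a step such as `python gate.py sast-report.json high` would then fail the build whenever a high or critical finding is present, while lower-severity findings are left for triage.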

Improved code quality

- Beyond security, SAST also aids in improving overall code quality by identifying potential logic errors, dead code, or inefficient patterns.

Compliance

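- SAST helps organisations meet regulations and standards such as PCI DSS, which requires secure software development practices, and GDPR, which requires appropriate security measures, as well as align with guidance such as the OWASP Top 10.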

SAST tools CACI uses to support customers

- SonarQube – Offers detailed code analysis covering bugs, code smells and security vulnerabilities, and integrates with various CI/CD tools.

- Checkmarx – Specialises in detecting vulnerabilities in source code and includes support for multiple programming languages.

- Fortify Static Code Analyzer – Offers comprehensive vulnerability detection across a wide range of programming languages.

- Veracode Static Analysis – Offers a cloud-based platform for static code scanning, emphasising compliance and risk assessment.

- SpotBugs – The successor to FindBugs, this open-source static code analyser detects possible bugs in Java programs. It classifies potential bugs into four ranks: (i) scariest, (ii) scary, (iii) troubling and (iv) of concern.
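Behind these four ranks, SpotBugs scores each bug with a number from 1 (most severe) to 20. The small helper below maps a numeric rank to its category label, assuming the boundaries used by SpotBugs and its predecessor FindBugs (1 to 4 scariest, 5 to 9 scary, 10 to 14 troubling, 15 to 20 of concern); it is worth confirming these against the documentation for the version in use.

```python
# (highest rank in category, label); boundaries assumed from SpotBugs/FindBugs.
RANK_CATEGORIES = [(4, "scariest"), (9, "scary"), (14, "troubling"), (20, "of concern")]


def rank_category(rank: int) -> str:
    """Map a SpotBugs bug rank (1 = most severe, 20 = least) to its category label."""
    if not 1 <= rank <= 20:
        raise ValueError(f"rank must be between 1 and 20, got {rank}")
    # Categories are ordered, so the first upper bound that fits is the right one.
    return next(label for max_rank, label in RANK_CATEGORIES if rank <= max_rank)
```

A mapping like this is handy when feeding SpotBugs results into a severity gate that expects named levels rather than numbers.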

Benefits of DAST

Runtime vulnerability detection

- DAST identifies issues such as SQL injection, cross-site scripting (XSS) and other runtime vulnerabilities that static analysis might miss.

Real-world simulation

- By emulating real-world attacks, DAST provides insight into how an application performs under adversarial conditions.

Technology agnostic

- Since it doesn’t rely on source code, DAST can test applications regardless of the underlying technology stack.

Post-deployment assurance

- DAST can also test applications in production environments, helping to confirm that deployed applications remain secure.

DAST tools CACI uses to support customers

- OWASP ZAP – An open-source tool favoured for its user-friendly interface and active community support; it can identify many of the vulnerability types listed in the OWASP Top 10.

- Burp Suite – Widely used by security professionals for its advanced penetration testing capabilities.

- Netsparker – Known for its automation features and ability to identify vulnerabilities with minimal false positives.

- AppSpider – Tailored for dynamic testing of modern web and mobile applications.

Benefits of SCA

Security management

- SCA identifies known vulnerabilities in open-source components using sources such as the National Vulnerability Database (NVD), which links published vulnerabilities to the Common Weakness Enumeration (CWE), a system that categorises weaknesses in software and hardware.
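At its core, this check compares each component's name and version against advisories that list affected version ranges. The sketch below is a simplified, offline illustration: the advisory record (`EXAMPLE-0001` for a package called `examplelib`) is fictitious, the CWE tag is attached purely for illustration, and versions are assumed to be plain dotted integers. Real SCA tools query curated vulnerability databases and handle far more complex version schemes.

```python
from dataclasses import dataclass


def parse_version(v: str) -> tuple[int, ...]:
    """Turn '1.4.2' into (1, 4, 2); assumes purely numeric dotted versions."""
    return tuple(int(part) for part in v.split("."))


@dataclass
class Advisory:
    advisory_id: str
    package: str
    introduced: str  # first affected version (inclusive)
    fixed: str       # first fixed version (exclusive upper bound)
    cwe: str         # weakness category, e.g. CWE-89 (SQL injection)


# Fictitious advisory data for illustration only.
ADVISORIES = [
    Advisory("EXAMPLE-0001", "examplelib", "1.0.0", "1.4.3", "CWE-89"),
]


def find_vulnerable(dependencies: dict[str, str]) -> list[tuple[str, str, str]]:
    """Return (package, advisory_id, cwe) for each dependency inside an affected range."""
    hits = []
    for name, version in dependencies.items():
        v = parse_version(version)
        for adv in ADVISORIES:
            if adv.package == name and parse_version(adv.introduced) <= v < parse_version(adv.fixed):
                hits.append((name, adv.advisory_id, adv.cwe))
    return hits
```

Recording the CWE alongside each hit lets teams group findings by weakness type, for example to see how many dependencies carry injection-class flaws.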

Licence compliance

- Checks that software libraries and dependencies are used in line with their open-source licences (e.g., MIT, GPL, Apache), helping to avoid legal issues arising from non-compliance.
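Licence checks are usually driven by a policy stating which licences are acceptable. The sketch below assumes a hypothetical policy keyed by SPDX licence identifiers (such as `MIT`, `Apache-2.0` and `GPL-3.0-only`): permissive licences are allowed, strong copyleft licences are flagged for review, and anything unrecognised is reported separately. The lists themselves are placeholders; what belongs in each depends on how the software is distributed.

```python
# Hypothetical policy using SPDX licence identifiers.
ALLOWED = {"MIT", "Apache-2.0", "BSD-3-Clause"}
REVIEW = {"GPL-2.0-only", "GPL-3.0-only", "AGPL-3.0-only"}


def check_licences(components: dict[str, str]) -> dict[str, list[str]]:
    """Sort components into 'allowed', 'review' and 'unknown' buckets by their licence."""
    result = {"allowed": [], "review": [], "unknown": []}
    for name, licence in sorted(components.items()):
        if licence in ALLOWED:
            result["allowed"].append(name)
        elif licence in REVIEW:
            result["review"].append(name)
        else:
            # Missing or unrecognised licences also need a human decision.
            result["unknown"].append(name)
    return result
```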

Risk management

- Improves visibility into the software supply chain, helping to keep third-party components secure and compliant. SCA tools can also produce detailed reports, such as a software bill of materials (SBOM), for audits and governance processes.
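A software bill of materials (SBOM) is the usual form of this inventory. The sketch below emits a minimal JSON document loosely modelled on the CycloneDX format (`bomFormat`, `specVersion` and a `components` list with package URLs and licence identifiers). It has not been validated against the CycloneDX schema, assumes the components are Python packages, and uses hypothetical component data; in practice, SCA tools generate SBOMs directly from the build.

```python
import json


def build_sbom(components: list[dict]) -> str:
    """Render components as a minimal CycloneDX-style JSON SBOM (not schema-validated)."""
    bom = {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "version": 1,
        "components": [
            {
                "type": "library",
                "name": c["name"],
                "version": c["version"],
                # Package URL (purl) for a PyPI package; assumes Python dependencies.
                "purl": f"pkg:pypi/{c['name']}@{c['version']}",
                "licenses": [{"license": {"id": c["licence"]}}],
            }
            for c in components
        ],
    }
    return json.dumps(bom, indent=2)
```

Because every entry carries a name, version and licence, the same document can feed both the vulnerability and the licence checks during an audit.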

Popular SCA tools CACI uses to support customers

- Snyk – A developer-centric SCA tool that focuses on security vulnerabilities and licence compliance, and integrates with development environments and CI/CD pipelines.

- Black Duck – Comprehensive SCA tool for open-source security and licence compliance management, providing policy enforcement and vulnerability scanning.

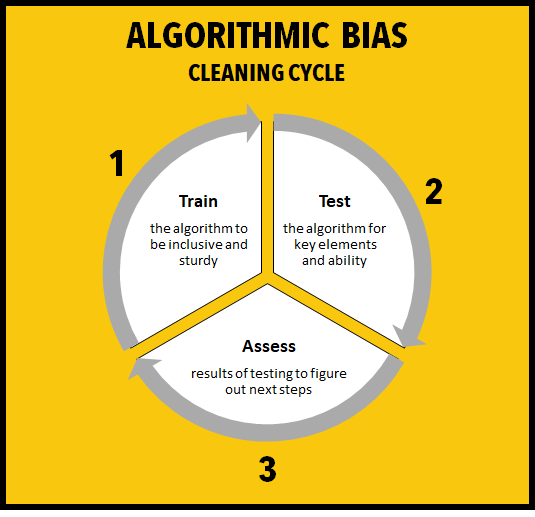

Understanding the synergy of SAST and DAST

While SAST and DAST offer distinct advantages, combining them creates a powerful defence against vulnerabilities. SAST addresses issues at the code level, preventing bugs from propagating into production, while DAST uncovers runtime vulnerabilities that static analysis cannot detect. Together, they provide broader coverage than either achieves alone, reducing the attack surface of the software. For example:

- SAST might detect unvalidated user inputs during code review, while DAST confirms whether input validation issues could lead to SQL injection when the application is running.

- SAST can identify insecure cryptographic practices, whereas DAST tests whether those practices are exploitable in a live environment.
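The first example can be reproduced in miniature with Python's built-in `sqlite3` module. The sketch below runs the same injection payload against a query built by string formatting (the pattern a SAST rule would flag) and against a parameterised query. Executing both, as a DAST tool would against a live endpoint, shows that only the first is exploitable. The table, data and payload are hypothetical.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "a1"), ("bob", "b2")])
    return conn


def lookup_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Vulnerable: user input is pasted directly into the SQL text.
    return conn.execute(f"SELECT name, secret FROM users WHERE name = '{name}'").fetchall()


def lookup_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Parameterised: the driver passes the input as data, never as SQL.
    return conn.execute("SELECT name, secret FROM users WHERE name = ?", (name,)).fetchall()


# Turns the WHERE clause into: name = 'x' OR '1'='1'
PAYLOAD = "x' OR '1'='1"

if __name__ == "__main__":
    conn = make_db()
    print("unsafe:", lookup_unsafe(conn, PAYLOAD))  # leaks every row
    print("safe:  ", lookup_safe(conn, PAYLOAD))    # matches no rows
```

The static finding and the runtime result point to the same fix: replacing string-built SQL with parameterised queries.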

Benefits of implementing SAST, DAST and SCA together

Holistic security coverage

- The combined approach tackles vulnerabilities from the development, runtime and third-party component perspectives.

Cost and time efficiency

- Detecting and fixing vulnerabilities at different stages prevents costly post-deployment fixes and potential breaches.

Increased trust and compliance

- Organisations gain confidence in their applications and can demonstrate their commitment to security to customers and stakeholders.

Proactive security culture

- Incorporating all three methodologies fosters a proactive approach to cybersecurity, embedding it as a core principle of the SDLC.

How CACI can help

SAST, DAST and SCA are indispensable tools in a comprehensive application security strategy. By addressing vulnerabilities at different stages of the development lifecycle, they significantly reduce the risk of cyberattacks, enhance software reliability and support compliance with security standards. By combining SAST, DAST and SCA tools, organisations can secure their applications and build a robust foundation of trust with their users.

At CACI, we integrate SAST, DAST and SCA into our software development and deployment workflows, creating a layered defence that keeps vulnerabilities at bay while enabling continuous delivery of secure, high-quality software. To learn more about how we can help your organisation enhance its security testing and application security efforts, contact us today.
