Call it Secure Access Service Edge (SASE), call it Security Service Edge (SSE), call it Zero Trust Network Access (ZTNA), or even just call it the Service Edge. You might be forgiven for thinking that talk of VPN is everywhere at the moment, and for wondering why everyone is cloudwashing what you’ve known as remote access VPN for many years.

The answer, bluntly, is that they aren’t: the modern business application landscape has changed vastly, and remote access needs to change with it.

Deep dive into the history of SASE & SSEs

There was a time when all your business applications were on-premises (on-prem) and you’d only occasionally need to grant employees access from home. Let’s say you’re a typical enterprise shop with:

- A few on-premises data centres run by various UK managed service providers (MSPs), or even colocation (colo) providers

- A few thousand employees scattered across the UK

- A hundred or so business applications

- A few internet gateway data centres with web proxies, reverse proxies and some diverse Direct Internet Access (DIA) connectivity

- Two hundred or so branch/office locations across various UK towns, cities and the odd factory/out-of-town location

- Dipping your toe into public cloud providers such as Amazon Web Services (AWS), Microsoft Azure and Google Cloud (GCP)

Your environment might’ve looked a little like this:

Dissecting the Current Mode of Operation (CMO)

Most of your users were in the office most of the time, and when they needed to, they would use their VendorCo VPN client to dial into https://vpn.yourco.com, which would non-deterministically load balance between the Manchester VPN concentrator and the London VPN concentrator. This happened regardless of where the user was geographically located (“Look, it was too hard to explain to the MSP what Anycast DNS is, OK?”). Their productivity files (Word documents, Excel spreadsheets, etc.) were mostly still on-prem, on file servers in their usual office locations, and roughly 80% of the business support system (BSS) and business applications they needed to perform their roles were also in your BSS data centres.

You were running some proof of concept (PoC) work with public cloud service providers (CSPs), but weren’t too sure whether this would really take off in Big Enterprise or suit your needs. Most of your business apps had a long heritage: they came from a time before JavaScript frameworks and runtimes such as React, Vue and Node.js existed, and largely before anyone thought a web UI could be used for anything useful. You’ve got plenty of fat clients and Oracle and IBM middleware layers, and people generally accept that your ERP application looks like someone threw up with a paint can and flunked out of Data Input class in college (“Checkboxes? Dropdowns? Multi-selects? Has this vendor never heard of input validation?!”).

Add in some forward (web) proxies, because Facebook won’t read itself (and you have some legitimate web applications your employees need to get to). Sprinkle on some reverse proxies, because over the years you’ve found that suppliers, systems integrators (SIs) and partners all need access to some applications hosted in your BSS/backend data centres, which weren’t natively set up to be internet-accessible. Sadly, these apps didn’t generally have any notion of an API, so you’ve had to set up a reverse proxy or two in your internet gateway data centre to allow inbound internet access to them from other systems (M2M).

Most of your staff thought the remote access VPN was clunky, slow and cumbersome to launch (“Ever noticed https://internal-intranet doesn’t load on the VPN if you left Internet Explorer open before you took your laptop home for the day, off the VPN?”), but because they rarely used it, they would quietly tolerate the issues it posed. You thought it was nice and secure because you only had two entry points into your network via the WAN.

COVID-19 Mode of Operation

Things rapidly change during lockdown. Suddenly, most of your users are firmly working from home and struggling to use your remote access VPN because:

- It doesn’t have the bandwidth

- It’s slow to load (your Scottish users are going via London; your Watford users are going via Manchester; you lament not putting in Anycast DNS or Geo-based DNS GSLB)

- It’s backhauling everything through two overloaded internet gateways (which have their DIA and MPLS pipes in a constant state of “on fire” in terms of network capacity utilisation)

- It’s not allowed through half the MSP firewalls/ACLs in your on-prem environment (“I thought the VPN was 10.99.0.0/24; when did they change it to 10.98.0.0/23 on us? It’ll take weeks to raise these ITIL firewall change requests with MSPCo to get it done!”)

- Your less technical staff don’t realise they have to enable it to reach some on-prem applications and disable it to reach the applications you’ve hastily lifted-and-shifted to public cloud.
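
The firewall complaint above comes down to simple address matching: a source address is only let through if it falls inside a range the ACL permits. The minimal sketch below uses Python’s standard `ipaddress` module to show why a silent change of VPN client pool breaks access. The ACL contents and function names are hypothetical; real MSP firewalls express this in their own rule syntax.

```python
import ipaddress

# Hypothetical MSP firewall ACL: the source range it was told the VPN pool uses.
PERMITTED_SOURCES = [ipaddress.ip_network("10.99.0.0/24")]

# The VPN client pool after the change the firewall team never heard about.
NEW_VPN_POOL = ipaddress.ip_network("10.98.0.0/23")


def source_permitted(addr: str) -> bool:
    """True if a client source address falls inside any permitted range."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in PERMITTED_SOURCES)


def pool_covered(pool: ipaddress.IPv4Network) -> bool:
    """True only if the whole pool sits inside a single permitted range."""
    return any(pool.subnet_of(net) for net in PERMITTED_SOURCES)


print(source_permitted("10.99.0.42"))  # True: address from the old pool
print(source_permitted("10.98.1.7"))   # False: new pool, silently dropped
print(pool_covered(NEW_VPN_POOL))      # False
print(NEW_VPN_POOL.num_addresses)      # 512 addresses now need permitting
```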

In short, it’s not really working out for you. But can you blame it? Remote access SSL/IPsec VPN comes from an era before distributed cloud computing existed; it’s a moat that expects a castle with everything inside it. And because the applications of its era operated at the lower layers of the OSI model, it needs to give your client a network-level (layer 3) IP address and effectively act as an extension of your corporate WAN, “just in case” the business application in question needs that functionality to work.

It’s a solution no longer fit for the current application landscape of public cloud, commodified applications and geo-distributed workloads.

The 80/20 split has flipped: most of your business applications now sit outside your Network Edge castle, beyond the security afforded by your moat. The drawbridge is firmly down, and people are fleeing your castle-and-moat WAN architectures.

Assessing the Future Mode of Operation (FMO)

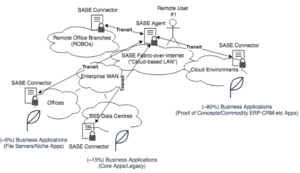

Let’s level-set a bit: you’re still a Big Enterprise, so digital transformation isn’t going to be quick. You’ve also still got legacy and on-premises workloads, such as mainframes, that can’t move to the public cloud for several sensible financial, compliance and business reasons. However, thanks to the COVID-19 pandemic, you’ve now started (more aggressively than you’d like) to:

- Move commodified applications (i.e. all the apps that were never specific to YourCo PLC in the first place, such as collaboration, document hosting, ERP and CRM) to the public cloud:

  - Typically, as a software as a service (SaaS) offering, in which case, as far as your WAN is concerned, it may as well not exist (“You only need a web browser and an internet connection to access it”).

  - Sometimes, as a hybrid of SaaS and platform as a service (PaaS) cobbled together to meet a niche workload requirement (though even then, at least the app’s front door is likely to be “just access it with your web browser and internet connection”, even if the backend/M2M interaction isn’t).

- Deploy SASE connectors (e.g. Zscaler ZPA Connectors) as close to the applications as possible, and in multiples (unlike the handful of SSL-VPN concentrators you had before)

- Deploy SASE connectors into your branch offices

- Deploy SASE connectors into your public cloud-hosted apps (or accept their native internet front door)

You’ve come to terms with the fact that most of your staff work well remotely, and that a “full-time return to the office” is unlikely to happen. Your staff are much happier using the SASE platform (e.g. Zscaler, Cato Networks, Cloudflare, Netskope), and, unexpectedly, your DIA and MPLS pipes are actually quieter because of it.

Your security posture has improved, and you’ve seen fewer perimeter breaches, but you’re not too sure why. Users also report that the same legacy applications (which you’ve not touched since before the pandemic) perform better from home than they did over the old remote access VPN.

So, what’s the momentous change? Why are most things so much better? It’s just SSL-VPN tunnels, right?

SSE is not an OSI layer 3 network extender

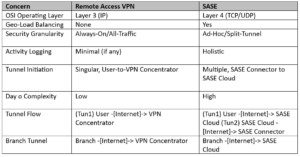

To understand the performance and security gains that an SSE with ZTNA brings to the table, we need to compare what a SASE/SSE does with what a traditional remote access VPN does. It’s perhaps easier to see this side by side:

By far the biggest difference in the SASE model is the cloud-based LAN. It’s the “magic” that stitches multiple SSL tunnels together, so the solution uses only the tunnels a given end-to-end flow actually needs, rather than “wasting” internet gateway DIA/MPLS bandwidth on a flow that may not be to or from an on-prem system or location at all. It also delivers the main benefits of SASE over remote access VPN highlighted above: because the cloud-based LAN sits as the “piggy in the middle” (a man-in-the-middle, or MITM) of every application flow, it can enforce security, bandwidth and other controls at higher layers of the OSI model than a layer 3-constrained remote access VPN can.
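
A toy model can make the layer 3 versus per-application distinction concrete. The sketch below is deliberately simplified and entirely hypothetical: the address range, application names, groups and policy table are invented for illustration, and no vendor’s policy engine works exactly like this. A layer 3 VPN decides on routability alone, whereas a broker sitting in the middle of each flow can make a default-deny decision per user, application and port.

```python
import ipaddress
from dataclasses import dataclass

# Hypothetical corporate address space a layer 3 VPN routes for its clients.
CORPORATE_ROUTES = [ipaddress.ip_network("10.0.0.0/8")]

# Hypothetical per-application policy: (application FQDN, port) -> allowed groups.
APP_POLICY = {
    ("erp.yourco.internal", 443): {"finance"},
    ("intranet.yourco.internal", 443): {"all-staff"},
}


@dataclass(frozen=True)
class Flow:
    user_groups: frozenset  # directory groups of the requesting user
    app: str                # application FQDN the user is trying to reach
    dest_ip: str            # address the application resolves to
    port: int


def l3_vpn_allows(flow: Flow) -> bool:
    """Layer 3 VPN: anything routable inside the corporate space is reachable.

    Identity, application and port are not consulted here; any restriction
    would have to come from separate internal firewalls.
    """
    ip = ipaddress.ip_address(flow.dest_ip)
    return any(ip in net for net in CORPORATE_ROUTES)


def ztna_allows(flow: Flow) -> bool:
    """ZTNA-style broker: default deny unless policy grants one of the
    user's groups access to this specific application and port."""
    allowed = APP_POLICY.get((flow.app, flow.port), set())
    return bool(flow.user_groups & allowed)


flow = Flow(frozenset({"all-staff"}), "erp.yourco.internal", "10.20.30.40", 443)
print(l3_vpn_allows(flow))  # True: the ERP server's IP is routable over the VPN
print(ztna_allows(flow))    # False: no policy grants "all-staff" the ERP app
```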

SASE is also a better fit for the business application world, which is doing much the same thing: the cloud is the “world’s computer”, with multiple attachment points regionally close to users, treating the user (not the app) as the centre of the universe. In much the same way that the public cloud uses global points of presence (PoPs) to reduce an application’s latency and serve it as close to the user as possible, a SASE uses the closest “VPN concentrator” (PoP) to a given user. The OpEx-driven model allows a SASE provider to do this cost-effectively for you as a single customer, whereas building your own “globe-spanning cloud LAN” would require far more outlay than you may be able to afford. Using the SASE provider’s reach and expertise therefore makes much more sense than persisting with a more cumbersome remote access VPN.
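
How a given SASE vendor steers each user to a nearby PoP (DNS, anycast, client-side probing or a mix) varies and isn’t specified here. The sketch below simply assumes the client has a handful of round-trip-time probes per candidate PoP and picks the one with the lowest median. The function name, PoP names and RTT figures are invented for illustration.

```python
from statistics import median


def choose_pop(rtt_samples_ms: dict[str, list[float]]) -> str:
    """Return the PoP with the lowest median round-trip time.

    The median is used rather than the mean so that a single slow probe
    does not rule out an otherwise nearby PoP. PoPs with no samples are skipped.
    """
    medians = {pop: median(s) for pop, s in rtt_samples_ms.items() if s}
    if not medians:
        raise ValueError("no RTT samples for any PoP")
    return min(medians, key=medians.get)


# Invented figures for a user in Glasgow; one Manchester probe hit a spike.
samples = {
    "pop-london": [14.1, 13.8, 15.2],
    "pop-manchester": [8.9, 9.4, 40.0],
}
print(choose_pop(samples))  # pop-manchester (median 9.4 ms vs 14.1 ms)
```

With a mean instead of a median, the single 40 ms spike would have pushed this user to London (roughly 19.4 ms versus 14.4 ms).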

SASE is doing to the enterprise WAN what SD-WAN did to the enterprise MPLS network, and what the enterprise MPLS network did to the leased-line mesh that preceded it: abstracting away point-to-point SSL tunnels into a fabric of dynamically run, point-to-multipoint SSL tunnel flows that are created and destroyed on demand.
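
To make “created and destroyed on demand” concrete, here is a toy, single-client model of such a fabric: a tunnel to a given endpoint is opened only when a flow first needs it, reused while flows keep arriving, and torn down once it has been idle for longer than a timeout. The class, endpoint names and 300-second timeout are hypothetical, no real tunnel is built, and real SASE fabrics are far more involved; this only illustrates the lifecycle. Time is passed in explicitly, rather than read from a clock, so the behaviour is easy to follow and test.

```python
from dataclasses import dataclass, field


@dataclass
class Tunnel:
    endpoint: str     # hypothetical connector or PoP identifier
    last_used: float  # timestamp of the most recent flow over this tunnel


@dataclass
class TunnelFabric:
    idle_timeout: float = 300.0
    tunnels: dict[str, Tunnel] = field(default_factory=dict)
    opened: int = 0  # total tunnels created over the fabric's lifetime

    def route_flow(self, endpoint: str, now: float) -> Tunnel:
        """Reuse the tunnel to this endpoint if one exists; otherwise open it on demand."""
        tunnel = self.tunnels.get(endpoint)
        if tunnel is None:
            tunnel = Tunnel(endpoint, now)
            self.tunnels[endpoint] = tunnel
            self.opened += 1
        tunnel.last_used = now
        return tunnel

    def reap_idle(self, now: float) -> list[str]:
        """Tear down tunnels unused for longer than idle_timeout; return their endpoints."""
        idle = [ep for ep, t in self.tunnels.items()
                if now - t.last_used > self.idle_timeout]
        for ep in idle:
            del self.tunnels[ep]
        return idle


fabric = TunnelFabric(idle_timeout=300.0)
fabric.route_flow("connector-manchester-dc", now=0.0)
fabric.route_flow("connector-azure-app", now=10.0)
fabric.route_flow("connector-manchester-dc", now=200.0)  # reuses the existing tunnel
print(fabric.opened)                # 2
print(fabric.reap_idle(now=400.0))  # ['connector-azure-app']
print(sorted(fabric.tunnels))       # ['connector-manchester-dc']
```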

How can CACI support your move to SSE or SASE?

At CACI Network Services, we’ve seen countless customer environments – from heritage, through legacy into microservices modernity – and innately understand the architecting, deploying and optimising of a variety of SSE and SASE security access solutions.

Get in touch and let us help you untangle the complex web of secure access into your Network Edge and demystify Zero Trust for your WAN.