In our rapidly evolving world, leveraging cutting-edge technology is no longer a luxury but a necessity, and Natural Language Processing (NLP) stands out as one of the most transformative tools available. NLP focuses on the interaction between computers and human language, and it underpins systems such as Large Language Models (LLMs), Interactive Voice Response systems (IVRs), and voice assistants. These technologies have the power to revolutionise a company's service by making interactions more efficient and effective whilst reducing costs, so why haven't more companies harnessed them?

Let's consider customer service – an area where the technology has already made significant strides. Many businesses still rely heavily on human operators to handle customer calls, including calls about highly specific and complex issues. Implementing NLP systems can reduce this reliance, leading to shorter wait times, improved efficiency and 24/7 availability. However, these systems often come with significant costs and require substantial infrastructure changes. If they are not executed properly, they can have unintended consequences and damage the customer experience. Therefore, before adding new systems, you must understand and quantify why customers are contacting you. You also need to identify where new systems can improve the customer journey and reduce costs.

What AI tools are there for text analysis?

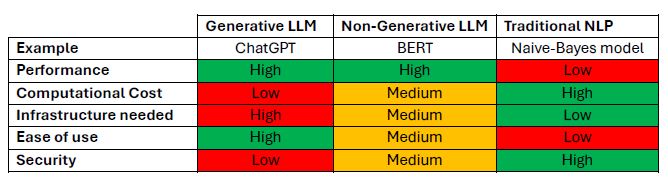

Various AI approaches are available to address a wide range of problems. We can categorise them as follows:

- Generative LLMs: Examples include GPT-4 (ChatGPT), Gemini, and Claude. These models excel at generating content, e.g. summarising a customer call.

- Non-generative LLMs: Examples include BERT, RoBERTa and their many variants. These models are used extensively in applications that require a deep understanding of context or meaning, e.g. accurately classifying a customer call into known topics.

- Traditional NLP techniques: This category encompasses rule-based systems, Word2Vec and more. These techniques work well for simpler tasks, e.g. detecting whether a particular service is mentioned in a customer webchat.
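The next example makes the traditional category concrete. It is a minimal rule-based sketch of the webchat case above that uses hand-written regular expressions to flag which services a message mentions. The service names, their patterns and the function name (`SERVICE_PATTERNS`, `services_mentioned`) are hypothetical placeholders and do not come from any real system.

```python
import re

# Hypothetical services and surface variants. In practice these lists are
# curated by hand, which is the manual tuning rule-based systems depend on.
SERVICE_PATTERNS = {
    "broadband": [r"broadband", r"wi-?fi", r"internet connection"],
    "mobile": [r"mobile", r"sim card", r"phone contract"],
}

# One case-insensitive pattern per service. The word boundaries stop, for
# example, "mobile" from matching inside "automobile".
COMPILED = {
    service: re.compile(r"\b(?:" + "|".join(variants) + r")\b", re.IGNORECASE)
    for service, variants in SERVICE_PATTERNS.items()
}


def services_mentioned(message: str) -> set[str]:
    """Return the set of services whose patterns appear in a webchat message."""
    return {service for service, pattern in COMPILED.items() if pattern.search(message)}


if __name__ == "__main__":
    print(services_mentioned("My WiFi keeps dropping since I got a new SIM card"))
```

This is cheap to run but brittle, because every phrasing has to be anticipated. A message such as "my router light is red" would not be flagged unless someone adds a pattern for it.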

What’s the difference between generative and non-generative LLMs?

Fundamentally, LLMs like GPT-4 and BERT are built from the same building blocks, called transformers, so what makes them different?

The original transformer architecture comprises both an encoder and a decoder, but it has been found that models can be specialised by stacking only encoder blocks or only decoder blocks. GPT-4, a generative LLM, is often described as a decoder-only architecture. This allows the model to receive an input and then generate contextually relevant text one token at a time, with each new token conditioned on the input and on the tokens generated so far. Not only does the output read like human-written text, it can also seem creative.
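The attention mask is one concrete way to see the encoder/decoder distinction. The toy sketch below uses plain Python with no real model weights, and its function names are hypothetical. A decoder-style causal mask lets each token attend only to itself and earlier tokens, which is what allows text to be generated left to right. An encoder-style mask lets every token see the whole input. Real models also differ in training objective: GPT-style models are trained on next-token prediction, while BERT is trained on masked language modelling.

```python
import math


def causal_mask(n: int) -> list[list[bool]]:
    """Decoder-style mask: position i may attend only to positions 0..i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def bidirectional_mask(n: int) -> list[list[bool]]:
    """Encoder-style mask: every position may attend to every position."""
    return [[True] * n for _ in range(n)]


def masked_softmax(scores: list[float], allowed: list[bool]) -> list[float]:
    """Softmax over the allowed positions; masked positions get zero weight."""
    kept = [s for s, ok in zip(scores, allowed) if ok]
    m = max(kept)  # subtract the max for numerical stability
    exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    scores = [0.0, 0.0, 0.0, 0.0]  # toy, uniform attention scores for one token
    # The first token in a decoder can only see itself...
    print(masked_softmax(scores, causal_mask(4)[0]))        # [1.0, 0.0, 0.0, 0.0]
    # ...while a token in an encoder sees the whole sentence.
    print(masked_softmax(scores, bidirectional_mask(4)[0]))  # [0.25, 0.25, 0.25, 0.25]
```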

BERT, on the other hand, is built using an encoder-only architecture, so think of it as a specialist in reading and interpreting human language rather than generating it. Used effectively, non-generative LLMs offer considerable power without many of the overheads associated with generative LLMs. Some infrastructure is needed to implement them, but the costs are not prohibitive, especially when using distilled models. These are smaller models trained to reproduce the behaviour of a larger one, such as DistilBERT. For instance, users can avoid making expensive, high-frequency API calls to generative LLMs or using extensive computational resources. Users also have greater control over model customisation, such as fine-tuning on their own data, which lets them achieve strong performance on domain-specific tasks. These advantages make non-generative LLMs an excellent choice for handling highly sensitive data within a secure, isolated system, e.g. a client's secure in-house database and systems.
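Distillation itself can be sketched in a few lines. Below is one common formulation of the soft-target loss used in knowledge distillation. The student is penalised by the KL divergence between the teacher's and its own temperature-softened output distributions, scaled by T². This is a simplified illustration built on assumptions. DistilBERT, for instance, combined a term like this with other losses. Real training would also use a framework such as PyTorch rather than plain lists.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2.

    This is only the soft-target term; in practice it is usually combined
    with an ordinary loss on the true labels.
    """
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's softened predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [3.0, 1.0, 0.2]  # toy logits over three hypothetical intent labels
    print(distillation_loss(teacher, [2.8, 1.1, 0.3]))  # small: student agrees
    print(distillation_loss(teacher, [0.2, 1.0, 3.0]))  # large: student disagrees
```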

The following table offers a high-level comparison of the different NLP tools:

Are traditional NLP techniques still relevant?

Although LLMs are highly adaptable and perform well across a wide range of tasks, traditional NLP techniques remain relevant because they can be tuned to a specific task. These methods have been in use for decades and continue to play a crucial role in many niche applications. Traditional approaches are typically cheap to run and highly specific. However, they require more manual tuning to achieve good results and typically only work well on low-complexity tasks. In general, these techniques are better suited to curated, lower-resource internal systems, where they can carry out dedicated automated tasks inside a pipeline.
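The next example shows a traditional technique slotting into a pipeline. It computes TF-IDF scores in plain Python to surface the terms that set each transcript apart from the others. The stop-word list, the sample sentences and the function names are illustrative assumptions.

```python
import math
import re
from collections import Counter

# A hand-maintained stop-word list: the kind of manual tuning traditional
# methods need. This short list is illustrative only.
STOP_WORDS = {"i", "my", "the", "a", "to", "is", "and", "it", "for", "on", "me"}


def tokenise(text: str) -> list[str]:
    """Lower-case, extract alphabetic tokens and drop stop words."""
    return [t for t in re.findall(r"[a-z']+", text.lower()) if t not in STOP_WORDS]


def tf_idf(documents: list[str]) -> list[dict[str, float]]:
    """Score each term in each document as term frequency x inverse document frequency."""
    tokenised = [tokenise(d) for d in documents]
    n_docs = len(documents)
    # Document frequency: how many documents contain each term at least once.
    df = Counter(term for tokens in tokenised for term in set(tokens))
    scores = []
    for tokens in tokenised:
        counts = Counter(tokens)
        scores.append({
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return scores


def top_terms(scores: dict[str, float], k: int = 3) -> list[str]:
    """Return the k highest-scoring terms, breaking ties alphabetically."""
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [term for term, _ in ranked[:k]]


if __name__ == "__main__":
    calls = [
        "I want to cancel my broadband contract",
        "My broadband is slow and keeps dropping",
        "I need to update my billing address",
    ]
    for call, scores in zip(calls, tf_idf(calls)):
        print(top_terms(scores), "<-", call)
```

In this toy run, "broadband" is down-weighted because it appears in two of the three transcripts. Meanwhile, "want" surfaces as a top term for the first transcript. That is exactly the kind of noise that calls for further manual tuning of the stop-word list.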

Intent classification in action

Back to our customer service example – using a combination of traditional NLP techniques, generative LLMs and non-generative LLMs, we can identify customers' intent when they speak to customer service operators.

First, we can apply quick traditional NLP methods to check whether they alone are suitable for the task. However, given the complexity of customer interactions, they are unlikely to produce robust results on their own. The next step would be to run a generative LLM over a subset of calls to identify intent topics. This may provide enough insight to improve the customer journey. Truly informed business decisions, however, require a holistic understanding, so quantifying the number of calls related to each topic is a natural next step.
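The discovery step could be set up along the following lines. The sketch draws a reproducible random sample of transcripts and packs them into a single prompt that asks a generative LLM to propose intent topics. The prompt wording and function names are hypothetical. The actual API call is left out because it depends on which provider or model is used.

```python
import random

# Hypothetical prompt wording; the exact instructions would be tuned per project.
PROMPT_TEMPLATE = (
    "Below are {n} customer service call transcripts.\n"
    "Identify the distinct reasons customers are calling and return a short, "
    "de-duplicated list of intent topics, one per line.\n\n{transcripts}"
)


def sample_calls(transcripts: list[str], k: int, seed: int = 0) -> list[str]:
    """Draw a reproducible random subset so the generative LLM sees a manageable sample."""
    rng = random.Random(seed)
    return rng.sample(transcripts, min(k, len(transcripts)))


def build_topic_discovery_prompt(sample: list[str]) -> str:
    """Number each transcript and insert the batch into the prompt template."""
    numbered = "\n".join(f"{i}. {t}" for i, t in enumerate(sample, start=1))
    return PROMPT_TEMPLATE.format(n=len(sample), transcripts=numbered)


if __name__ == "__main__":
    calls = ["I was charged twice this month", "How do I cancel?", "My router keeps restarting"]
    print(build_topic_discovery_prompt(sample_calls(calls, k=2)))
```

Fixing the random seed means the same sample can be re-examined later, which helps when comparing the topics proposed by different prompts or models.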

To quantify the number of calls, it is best to use a non-generative LLM like BERT. When classifying calls into a known set of topics, these models tend to outperform their generative counterparts, are much cheaper, and are far easier to deploy at scale. We have previously had great results using these techniques and methodologies across a range of projects.
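Once a classifier has been fine-tuned on the discovered topics, quantifying call volumes is a matter of classifying every call and counting the results. The sketch below assumes a hypothetical `classify` function that wraps such a model and returns a topic with a confidence score. The 0.7 threshold is an arbitrary placeholder. Low-confidence calls are set aside as "unclassified" rather than forced into a topic.

```python
from collections import Counter
from typing import Callable

# Hypothetical interface: a fine-tuned classifier (e.g. a BERT-style model)
# wrapped as a function returning (predicted_topic, confidence).
Classifier = Callable[[str], tuple[str, float]]


def topic_volumes(transcripts: list[str], classify: Classifier,
                  min_confidence: float = 0.7) -> dict[str, float]:
    """Classify every call and return the share of calls per topic.

    Predictions below min_confidence are counted as "unclassified" so they
    can be reviewed rather than silently inflating a topic.
    """
    counts: Counter[str] = Counter()
    for text in transcripts:
        topic, confidence = classify(text)
        counts[topic if confidence >= min_confidence else "unclassified"] += 1
    total = sum(counts.values())
    return {topic: n / total for topic, n in counts.most_common()}


if __name__ == "__main__":
    def keyword_stub(text: str) -> tuple[str, float]:
        # Stand-in for a real model, for demonstration only.
        if "bill" in text:
            return "billing", 0.9
        if "cancel" in text:
            return "cancellation", 0.8
        return "other", 0.4

    calls = ["Question about my bill", "I want to cancel", "My bill is wrong", "Hello"]
    print(topic_volumes(calls, keyword_stub))
    # {'billing': 0.5, 'cancellation': 0.25, 'unclassified': 0.25}
```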

How CACI can help

If you’re looking to enhance your business with cutting-edge NLP solutions, our in-house data science teams are here to help. Contact us today to start transforming your use of data and stay ahead in the ever-evolving landscape of AI and data science.